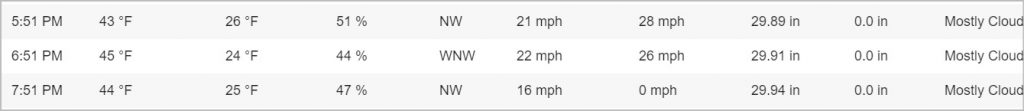

This recording was very different from the one yesterday. The levels were very balanced, though a bit low – it was also a much gentler evening. Completely different attitude from the day before. This change gave me the impetus to add a new processing method to the mix: Method G is here (I am not sure where Method F is… I’m sure it will turn up… oh yeah, that was the attempt to use Reason’s Bounce to MIDI function).

Project Theory

What makes Method G different from all those before it is that it involves analyzing the audio file, after which software creates MIDI scores for Drum, Melody and Harmony. Now, feeding in a lot of random sound with no set key, meter or tempo – well, that puts a lot of trust in the ALGORITHM (algo for short) to figure it out. As you might expect, it’s pretty glitchy, but it is also very valuable.

Think of it this way – you are an alien and you land on this planet and you whip out your tricorder, or maybe your… TRICHORDER – it samples the environment and does its best to bring the phenomena of this planet into a representation that makes sense to your alien cognition. Maybe it makes up for sensing capabilities you don’t have – like X-ray or fMRI.

Now think about yourself, encountering a new situation. You don’t have a firsthand understanding of the situation, but you want to really understand and get involved. So, you put everything at your disposal to work – you translate text – you do image recognition – you analyze the language that might be spoken (is that a language, or just the sound of living?).

At the end of the day, with all the technology but no person-to-person interaction, you are likely to have a very blurry and confused understanding of the situation. Whatever decisions you make to benefit the folks in this situation will be based on biased and inaccurate data. You could very quickly cause a disaster. On the other hand, your sensors could pick up something useful: say, that there are people with congenital hip dysplasia who could be helped with a well-understood operation. You can see what they cannot.

How do we bring these different views and interpretations of the same situation together? My hypothesis is that you have to just keep trying new “lenses”, adjusting the “parameters” and making observations in a very diverse set of conditions… and then what? How can you share that? How can you take that interpretation, with its methodology and data, and aggregate it with the interpretation, methodology and data that someone else has?

This is the Knowledge Building issue of our time. Solving this will have the deepest impact on humanity’s ability to communicate and forge mutual understanding.

The Technical and Music Stuff: Method G:

OK – so when I listened to this recording I was struck by the more mellow attitude, and there seemed to be some improvement in the overall recording, so it was time to try a different approach. Originally I was thinking about something that would be a sort of shimmering fog over everything. So, I tried out a bunch of things rather blindly. After about 3 hours, I decided to do something I rarely do – go back and read some notes that I had taken. In there was mention of another Output plugin I had not tried, but which was a constant presence in my Instagram feed. Signal is what Output calls a Pulse Engine, so why not try that out – there is a demo version, which I used today.

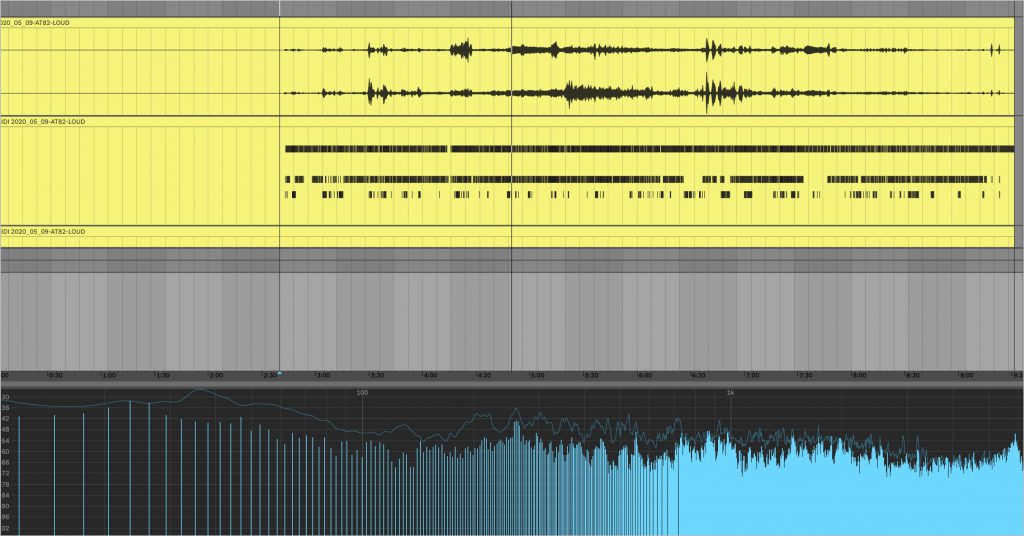

The first thing I realized was that this was not an audio effect, like Movement and the others; this was a MIDI Instrument. So… I needed to somehow get a MIDI representation of the audio file (WAV, 48,000 Hz, 24-bit). Reason has a “Bounce to MIDI” feature, but this seemed to only give me everything on a single note. This could not be the state of the art! Well, I did some research and came upon a piece of software from WidiSoft, and I gave their demo a whirl. Once I understood the role and usage of the various algos included, I found that I could generate Drum, Melody and Harmony parts. But I had the demo version, so I needed to investigate further (mostly I wanted to make sure I didn’t already have what I needed – could Scaler2 or Decoda work? They might, but I got a quick, easy answer with Ableton Live 10, which has the functionality built in – no fine tuning that I could see – but it worked).
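For anyone wondering what “getting a MIDI representation of audio” means at its very simplest, here is a minimal sketch in Python. It is not what Ableton Live, the WidiSoft software or Reason actually do (their algos are far more involved and not documented here); it only shows the basic idea of estimating a pitch and rounding it to the nearest MIDI note. It assumes a single clean tone, uses a crude zero-crossing estimate, and all the function names are made up for illustration. Random street sound with no set key or tempo would defeat it immediately, which is a fair hint at why the real conversions come out glitchy.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def sine_wave(freq_hz, seconds, sample_rate=48_000):
    """Synthesize a test tone (stands in for a real recording)."""
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_frequency(samples, sample_rate=48_000):
    """Crude pitch estimate: count upward zero crossings.

    Only meaningful for a single, clean, steady tone (an assumption).
    Returns None when there are too few crossings to measure.
    """
    crossings = [
        i for i in range(1, len(samples))
        if samples[i - 1] < 0 <= samples[i]
    ]
    if len(crossings) < 2:
        return None
    cycles = len(crossings) - 1
    span_seconds = (crossings[-1] - crossings[0]) / sample_rate
    return cycles / span_seconds


def frequency_to_midi(freq_hz):
    """Nearest MIDI note number, using A4 = 440 Hz = note 69."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def midi_to_name(note):
    """Note name with the common convention that note 60 is C4."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"


if __name__ == "__main__":
    tone = sine_wave(440.0, 0.5)
    freq = estimate_frequency(tone)
    note = frequency_to_midi(freq)
    print(round(freq, 1), note, midi_to_name(note))
```

A real converter has to do this continuously over time, cope with several pitches at once, and decide where notes start and stop, which is where the separate Drum, Melody and Harmony algos come in.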

So now I was ready – I created 3 MIDI channels and put an instance of Signal on each. Then I piped the Drum MIDI to one, Melody to the second and Harmony to #3. The raw audio went to Master, as did the MIDI tracks. It was really interesting and fun to interact with while finding the settings I ultimately chose. I then just let it play. After V1 was done I wanted to try putting Movement into the Master channel and have everything go through it. I left the settings pretty dry so as not to overwhelm. I think the result is pretty good, and there is a whole new universe of sounds and techniques open to me. It was a good day.

All NYC Data on this day from WolframAlpha:

https://www.wolframalpha.com/input/?i=all+data+new+york+city+May+9+2020

Weather on this day from WeatherUnderground

https://www.wunderground.com/history/daily/us/ny/new-york-city/KLGA/date/2020-5-9

The News of the day from one of the local New York City papers

https://www.nytimes.com/search?dropmab=false&endDate=20200507&query=05%2F07%2F2020&sort=best&startDate=20200509

Nick–This is such an interesting project! Like an old-fashioned time capsule, a set of circumstances captured in the blink of a day. Shackleton’s diary; the links, the news of the day, the atmospheric sounds of the day. And in reading through your posts and listening to your reflections, I appreciate the desire to make a time capsule of this weird time–the good and the bad and the observed.

“Now think about yourself, encountering a new situation. You don’t have a first hand understanding of the situation, but you want to really understand and get involved…”

I wanted to attach the round inkblots I have been making recently because I have been thinking a lot about this–what we do when encountering a new situation. I think our human minds try to make sense of what we see right away–clouds into creatures, cracks in the sidewalk to crazy drawings. As you know, I love this effect. But recently, I have been trying to wait a minute and NOT try to make sense of things right away. I had a conversation with a neighbor yesterday about cultivating awe while walking up to Ft Tryon Park–when we see a tree, instead of identifying it and classifying it, trying instead to just be with it.

Congratulations on the launch of your project. I am a little in the weeds with the technical parts, but count me a follower!

Margaret, thanks so much for the kind and insightful comments. If you have inkblots you would like to add to the project, let me know; I would be more than happy to include them. After all, part of this is connecting folks and their experiences so we can better understand each other and communicate more richly.

N