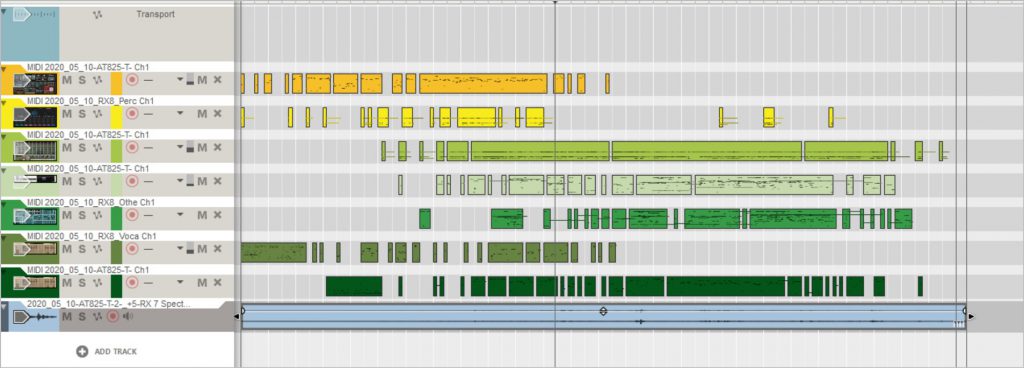

Today's recording could be called “What the Machines Heard” when you look at the process. From the raw recording I had two tracks of production.

Process and Tech

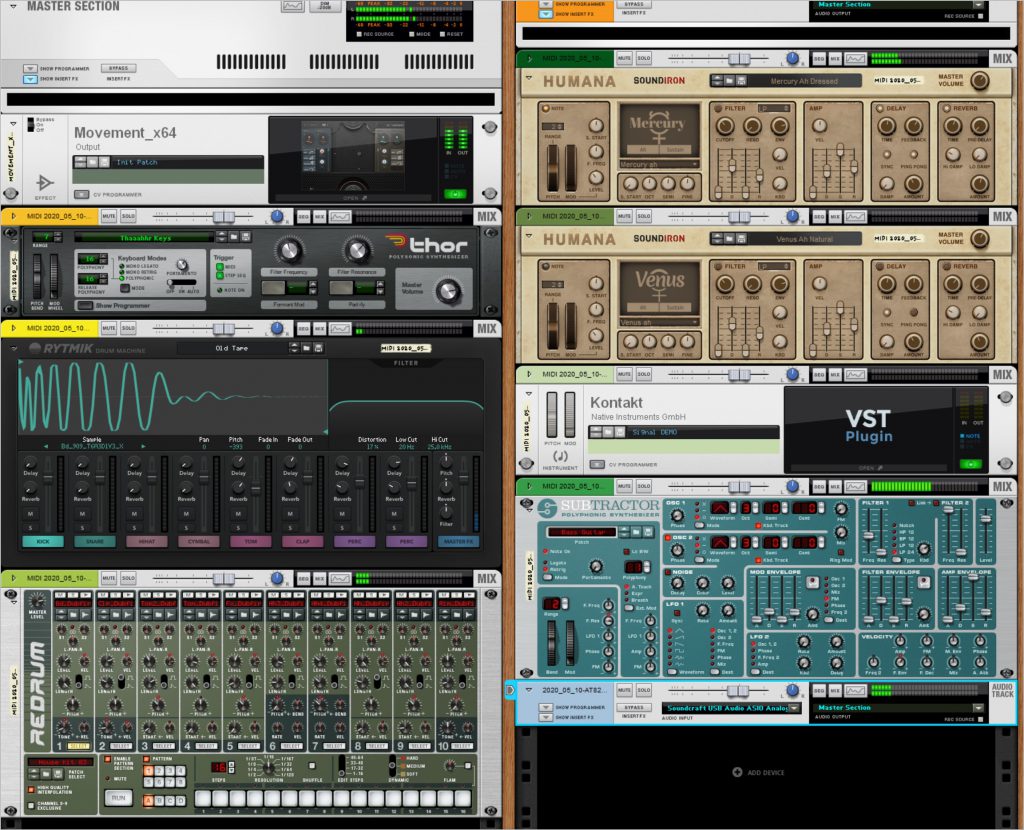

– Process One: The raw recording is sent through RX8 Music Rebalance, which takes an audio stream, tries to identify and isolate Vocals, Bass, Percussion, and Other, and then outputs an audio file for each of the components. This is where we can talk about what the machine hears, and also consider the question of bias that is implicit in AI/machine learning algorithms. That is, the creators of the algorithm assume that music is made up of voice, bass, drums and other… When that tool is applied to a situation that is not like traditional Western popular music, very strange results come out.

– Process Two: Using Ableton Live 10, I loaded the component audio files as well as the raw recording. Then I ran the Convert to MIDI process, with the outcome being 6 different MIDI files that represent the Harmony, Melody and Drums from the raw track. Each of the component files was processed with the relevant audio-to-MIDI conversion: Percussion -> Convert Drums to MIDI, Voice -> Convert Melody to MIDI, Other -> Convert Harmony to MIDI, and finally Bass was processed as melody.

– With all that, I assigned the MIDI tracks to relevant synths and then added the raw audio in as a bed. All signals were then sent to the Master bus, where Movement was applied at 50/50 Wet/Dry to give it a bit of a groove. This was all assembled and rendered in Reason 11.
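For anyone who wants to keep notes on the routing, the steps above can be jotted down as a small Python sketch. This is only a rough illustration: the names `STEM_TO_CONVERSION` and `wet_dry_mix` are made up for this post, nothing here talks to RX8, Ableton or Reason, and treating 50/50 Wet/Dry as a plain linear blend is my assumption, not a description of how Movement works internally.

```python
# Hypothetical names; this only tabulates the routing described in the post.
STEM_TO_CONVERSION = {
    "percussion": "Convert Drums to MIDI",
    "voice": "Convert Melody to MIDI",
    "other": "Convert Harmony to MIDI",
    "bass": "Convert Melody to MIDI",  # bass was processed as melody
}


def wet_dry_mix(dry, wet, wet_amount=0.5):
    """Blend two equal-length lists of samples.

    Assumption: a simple linear crossfade, where wet_amount=0.5
    corresponds to a 50/50 Wet/Dry setting.
    """
    if len(dry) != len(wet):
        raise ValueError("dry and wet signals must have the same length")
    if not 0.0 <= wet_amount <= 1.0:
        raise ValueError("wet_amount must be between 0.0 and 1.0")
    return [(1.0 - wet_amount) * d + wet_amount * w for d, w in zip(dry, wet)]


if __name__ == "__main__":
    # Print the stem routing as a quick reference table.
    for stem, conversion in STEM_TO_CONVERSION.items():
        print(f"{stem:>10} -> {conversion}")

    # Three toy samples blended at 50/50.
    print(wet_dry_mix([1.0, 0.0, -1.0], [0.0, 0.5, 1.0]))
```

Changing `wet_amount` is a cheap way to think through how much of the effect ends up in the final mix before touching the actual plugin.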

I think the results show that there are many, many avenues to follow. This took a long time to pull off because new opportunities kept presenting themselves. But in a nod to getting the work done and putting it out there, I have not spent time really tweaking the synth sounds. So this, and any other recording done with this process, offers a very interesting place to experiment with fine-tuning the synths.

I also like the synthy “voices”, which to me feel like what aliens would hear when we speak or sing. And you can hear some of the real voices creeping through the mix.

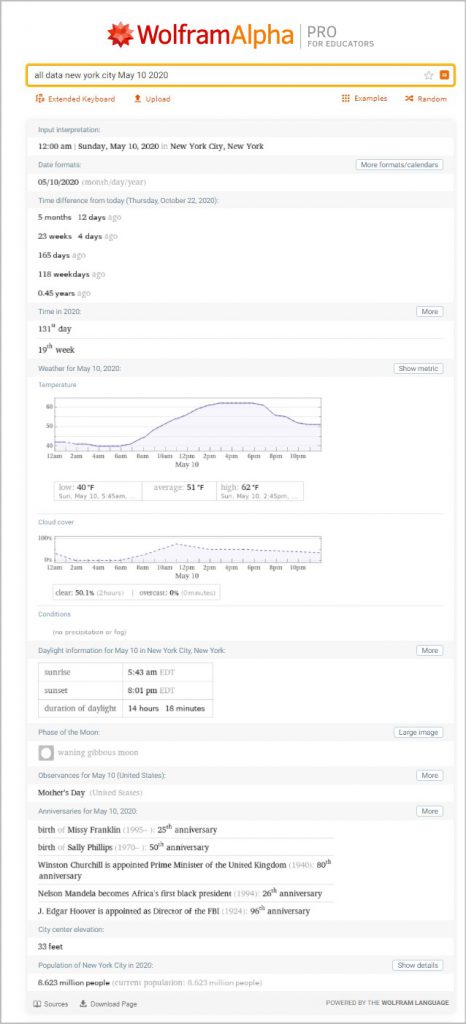

CONTEXT

All NYC Data on this day from WolframAlpha:

https://www.wolframalpha.com/input/?i=all+data+new+york+city+May+10+2020

The news of the day from one of the local New York City papers:

https://www.nytimes.com/search?dropmab=false&endDate=20200510&query=05%2F10%2F2020&sort=best&startDate=20200510